Time series modeling with MLM

library(dplyr)

library(ggplot2)

library(tidyr)

library(nlme)

library(knitr)

# Make sure you set your working dir to the location of the csv

# setwd('C:\\Users\\flournoy\\Downloads')

aDF<-read.csv('timeseries_DanceRandom_RAW_forRClub_28Apr2015.csv')First, we’ll lengthen the data set so we get a row for every dwell time observations using tidyr::gather and some dplyr functions

We also want to know which slide number each dwell time observation is from as well as which within-element position it represents.

aDF_l<-aDF %>%

dplyr::select(-TDT,-Order) %>%

gather(slide,dt,-SubjID) %>%

mutate(slide_num=as.numeric(sub('X([0-9]+)','\\1',slide))) %>%

arrange(SubjID,slide_num) %>%

mutate(

element_position=rep(1:4,n()/4),

slide_num=slide_num-min(slide_num))

kable(head(aDF_l))| SubjID | slide | dt | slide_num | element_position |

|---|---|---|---|---|

| 10 | X18 | 0.186 | 0 | 1 |

| 10 | X19 | 0.796 | 1 | 2 |

| 10 | X20 | 0.216 | 2 | 3 |

| 10 | X21 | 0.211 | 3 | 4 |

| 10 | X22 | 0.453 | 4 | 1 |

| 10 | X23 | 0.352 | 5 | 2 |

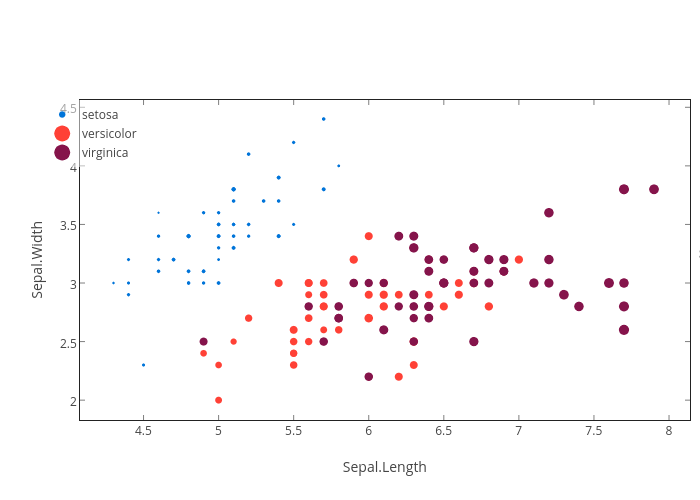

We can plot each participant’s curve dwell time curve:

ggplot(aDF_l,aes(x=slide_num,y=dt))+

geom_line(aes(group=SubjID),alpha=.05)+

coord_trans(ytrans='log10')+

geom_smooth(aes(group=NULL),method=loess,se=F)+

theme(panel.background=element_rect(fill='white'))To test autocorrelation at different lags we’re going to make an expected correlation matrix structure for the residuals. For example, for a lag-1 autocorrelation structure we’re imposing a structure such that there will be a correlation between the size of the residual for slide 0 and slide 1 that is the same as the correlation between the residual for slide 1 and slide 2.

We’re really interested in the lag 4 correlation – that is, the correlation between the residual for slide i and slide i+4. In our case, the residual will be whatever is left over after we account for the mean (i.e., intercept) and the linear trend across the entire experiment of 720 slides.

First, set our autoregressive moving average options:

lag_4_pos_by_subj<-corARMA(

value=c(0,0,0,.2), # Initial values for lag 1-4

p=4,q=0, # We want 4 lags, and 0 moving averages estimated

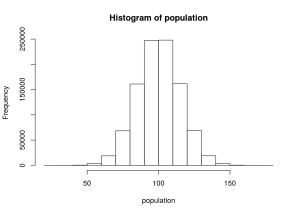

form=~slide_num|SubjID) # slide_num is our time variable, grouped by SubjIDThis uses our data to initialize a correlation matrix, which we only do for illustration purposes. nlme will do this automatically later on. The figure is what our expected residual correlation matrix looks like.

lag_4_pos_by_subj_initd<-Initialize(lag_4_pos_by_subj,data=aDF_l)

aMat<-corMatrix(lag_4_pos_by_subj_initd)

single_mat<-aMat$`10`

single_mat_l<-single_mat %>% as.data.frame %>%

mutate(y=1:n()) %>%

gather(x_col,value,-y,convert=T) %>%

mutate(

x=as.numeric(sub('V([0-9]+)','\\1',x_col)),

group=rep(1:10,each=51840))single_mat_l %>%

filter(value > .01) %>%

ggplot(aes(x=x,y=y))+

geom_tile(aes(fill=(value)))+

# facet_wrap(~group,scales='free',shrink=T) +

theme(

line=element_line(color='white'),

panel.background=element_rect(fill='white'))Now we can build and compare our models. We’ll test models with lag-1, -2, -3, and -4 AR structures.

Create our different residual correlation matrices:

lag_1_pos_by_subj<-corARMA(

value=c(0),

p=1,q=0,

form=~slide_num|SubjID)

lag_2_pos_by_subj<-corARMA(

value=c(0,0),

p=2,q=0,

form=~slide_num|SubjID)

lag_3_pos_by_subj<-corARMA(

value=c(0,0,0),

p=3,q=0,

form=~slide_num|SubjID)

lag_4_pos_by_subj<-corARMA(

value=c(0,0,0,0),

p=4,q=0,

form=~slide_num|SubjID)Build our null model (which includes a linear effect of time to account for that long linear trend we saw in the above plot).

nullModel<-lme(

dt~1+slide_num,

aDF_l,

random=~1|SubjID)

summary(nullModel)## Linear mixed-effects model fit by REML

## Data: aDF_l

## AIC BIC logLik

## 31533.89 31566.97 -15762.95

##

## Random effects:

## Formula: ~1 | SubjID

## (Intercept) Residual

## StdDev: 0.4327605 0.4161845

##

## Fixed effects: dt ~ 1 + slide_num

## Value Std.Error DF t-value p-value

## (Intercept) 0.7002197 0.06860064 28759 10.20719 0

## slide_num -0.0005525 0.00001180 28759 -46.82514 0

## Correlation:

## (Intr)

## slide_num -0.062

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -2.83833764 -0.30453025 -0.06975014 0.16771644 88.41714587

##

## Number of Observations: 28800

## Number of Groups: 40lag1Model<-lme(

dt~1+slide_num,

aDF_l,

random=~1|SubjID,

correlation=lag_1_pos_by_subj)

summary(lag1Model)## Linear mixed-effects model fit by REML

## Data: aDF_l

## AIC BIC logLik

## 29359.82 29401.16 -14674.91

##

## Random effects:

## Formula: ~1 | SubjID

## (Intercept) Residual

## StdDev: 0.4325128 0.416731

##

## Correlation Structure: AR(1)

## Formula: ~slide_num | SubjID

## Parameter estimate(s):

## Phi

## 0.2730642

## Fixed effects: dt ~ 1 + slide_num

## Value Std.Error DF t-value p-value

## (Intercept) 0.7026000 0.06869298 28759 10.22812 0

## slide_num -0.0005573 0.00001561 28759 -35.69883 0

## Correlation:

## (Intr)

## slide_num -0.082

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -2.83058935 -0.30722993 -0.07147524 0.16737678 88.29390180

##

## Number of Observations: 28800

## Number of Groups: 40anova(nullModel,lag1Model)## Model df AIC BIC logLik Test L.Ratio p-value

## nullModel 1 4 31533.90 31566.97 -15762.95

## lag1Model 2 5 29359.82 29401.16 -14674.91 1 vs 2 2176.075 <.0001Computing the next three models takes a really long time for each (90 minutes for lag-4), so I’ll parallelize it.

ar_lags<-list(

lag1=lag_1_pos_by_subj,

lag2=lag_2_pos_by_subj,

lag3=lag_3_pos_by_subj,

lag4=lag_4_pos_by_subj)

library(doParallel)registerDoParallel(cores=4)

lag_models<-foreach(

corstruct=ar_lags,

.packages=c('nlme','dplyr'),

.export=c('nullModel'),

.combine=bind_rows,

.multicombine=T

) %dopar% {

aModel<-update(

nullModel,

correlation=corstruct)

aSummary<-summary(aModel)

as_data_frame(list(

model=list(aModel),

summary=list(aSummary)))

}

save(lag_models,file='lag_models.RData',compress=T)

print(lag_models)## Source: local data frame [4 x 2]

##

## model summary

## 1 <S3:lme> <S3:summary.lme, lme>

## 2 <S3:lme> <S3:summary.lme, lme>

## 3 <S3:lme> <S3:summary.lme, lme>

## 4 <S3:lme> <S3:summary.lme, lme>Now we can compare them…

lag_models %>%

mutate(Total_Lags=1:4) %>%

group_by(Total_Lags) %>%

do({

phis<-coef(.$model[[1]]$modelStruct$corStruct,unconstrained=F)

nphis<-paste('lag',1:length(phis))

data.frame(

lag=nphis,

phi=phis

)

}) %>%

spread(lag,phi) %>%

kable(digits=2)## Warning in rbind_all(out[[1]]): Unequal factor levels: coercing to

## character| Total_Lags | lag 1 | lag 2 | lag 3 | lag 4 |

|---|---|---|---|---|

| 1 | 0.27 | NA | NA | NA |

| 2 | 0.23 | 0.18 | NA | NA |

| 3 | 0.20 | 0.14 | 0.15 | NA |

| 4 | 0.18 | 0.12 | 0.11 | 0.19 |

kable(

cbind(

list(Lags=c(1,2)),

anova(lag_models$model[[1]],lag_models$model[[2]])[,c(-1,-2)]),

row.names=F)| Lags | df | AIC | BIC | logLik | Test | L.Ratio | p-value |

|---|---|---|---|---|---|---|---|

| 1 | 5 | 29359.82 | 29401.16 | -14674.91 | NA | NA | |

| 2 | 6 | 28486.57 | 28536.17 | -14237.28 | 1 vs 2 | 875.2539 | 0 |

kable(

cbind(

list(Lags=c(2,3)),

anova(lag_models$model[[2]],lag_models$model[[3]])[,c(-1,-2)]),

row.names=F)| Lags | df | AIC | BIC | logLik | Test | L.Ratio | p-value |

|---|---|---|---|---|---|---|---|

| 2 | 6 | 28486.57 | 28536.17 | -14237.28 | NA | NA | |

| 3 | 7 | 27892.35 | 27950.22 | -13939.17 | 1 vs 2 | 596.2176 | 0 |

kable(

cbind(

list(Lags=c(3,4)),

anova(lag_models$model[[3]],lag_models$model[[4]])[,c(-1,-2)]),

row.names=F)| Lags | df | AIC | BIC | logLik | Test | L.Ratio | p-value |

|---|---|---|---|---|---|---|---|

| 3 | 7 | 27892.35 | 27950.22 | -13939.17 | NA | NA | |

| 4 | 8 | 26898.26 | 26964.40 | -13441.13 | 1 vs 2 | 996.0902 | 0 |

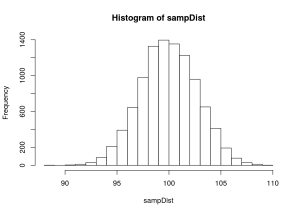

We can look at the predicted correlation matrix:

predicted_ARMA<-corARMA(value=c(.18,.12,.11,.19),p=4,form=~slide_num)

predicted_ARMA_init<-Initialize(predicted_ARMA,data=predict_df)

corMatARMA<-corMatrix(predicted_ARMA_init)

corMatARMA %>%

as.data.frame %>%

mutate(x=1:n()) %>%

gather(y,value,-x,convert=T) %>%

mutate(y=as.numeric(sub('V(.*)','\\1',y))) %>%

filter(value > .01) %>%

ggplot(aes(x=x,y=y))+

geom_tile(aes(fill=(value)))+

theme(

line=element_line(color='white'),

panel.background=element_rect(fill='white'))And we can produce simulated data with our fixed effects estimates for the linear trend as our mu and our correlation matrix as our Sigma for mvrnorm.

predict_df<-data.frame(slide_num=1:720)

predict_df$dt<-predict(

lag_models$model[[4]],

predict_df,

level=0)

library(MASS)

predict_df$dt_err<-mvrnorm(1,mu=scale(predict_df$dt),Sigma=corMatARMA)Our predictions look like this:

predict_df %>%

ggplot(aes(x=slide_num))+

geom_line(aes(y=dt_err))+

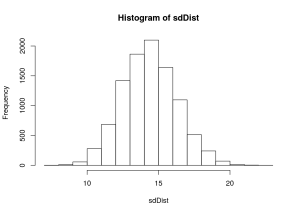

theme(panel.background=element_rect(fill='white'))Our data look like this:

aDF_l %>%

ggplot(aes(x=slide_num,y=dt))+

geom_line()+

facet_wrap(~SubjID,scale='free_y')+

theme(panel.background=element_rect(fill='white'))Not bad, visually speaking. I suspect we could do better if we modeled the the underlying rate parameter for a Poisson process, but that’s a whole other can of worms.

Here’s what happens if we just dummy-variable each element position:

summary(update(nullModel,.~.+as.factor(element_position)))

## Linear mixed-effects model fit by REML

## Data: aDF_l

## AIC BIC logLik

## 31554.64 31612.51 -15770.32

##

## Random effects:

## Formula: ~1 | SubjID

## (Intercept) Residual

## StdDev: 0.4327605 0.4161319

##

## Fixed effects: dt ~ slide_num + as.factor(element_position)

## Value Std.Error DF t-value p-value

## (Intercept) 0.7135225 0.06873087 28756 10.38140 0.0000

## slide_num -0.0005524 0.00001180 28756 -46.81881 0.0000

## as.factor(element_position)2 -0.0202103 0.00693554 28756 -2.91402 0.0036

## as.factor(element_position)3 -0.0161110 0.00693557 28756 -2.32295 0.0202

## as.factor(element_position)4 -0.0170860 0.00693562 28756 -2.46351 0.0138

## Correlation:

## (Intr) sld_nm a.(_)2 a.(_)3

## slide_num -0.061

## as.factor(element_position)2 -0.050 -0.002

## as.factor(element_position)3 -0.050 -0.003 0.500

## as.factor(element_position)4 -0.050 -0.005 0.500 0.500

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -2.82219320 -0.30539798 -0.07029029 0.16628406 88.39624069

##

## Number of Observations: 28800

## Number of Groups: 40