Check out an updated version of this post on RPubs: http://rpubs.com/rosemm/64056

611 Syllabus in R

Here’s the 611 schedule of topics from this year, and some code highlights from each week.

# a toy data set for us to work with

n <- 100

gender <- as.factor(c(rep("M", n/2), rep("F", n/2)))

IQ <- rnorm(n, mean=100, sd=15)

degree <- rep(c("HS", "BA", "MS", "PhD"), n/4)

height <- as.numeric(gender)-2 + rnorm(n, mean=5.5, sd=1)

RT1 <- rchisq(n, 4)

RT2 <- rchisq(n, 4)

DV <- 50 - 5*(as.numeric(gender)-1) + .1*IQ + rnorm(n)

df <- data.frame(awesome=DV, gender=gender, IQ=IQ, degree=degree, height=height, RT1=RT1, RT2=RT2)

Exploratory Data Analysis

head(df)

## awesome gender IQ degree height RT1 RT2

## 1 56.22 M 114.60 HS 6.260 1.965 0.4609

## 2 56.30 M 118.47 BA 5.010 1.516 4.6082

## 3 59.22 M 119.18 MS 4.455 5.396 2.2837

## 4 54.42 M 92.82 PhD 6.796 3.476 7.3616

## 5 53.95 M 92.41 HS 4.052 7.327 4.2431

## 6 58.60 M 115.49 BA 5.155 6.912 6.4011

View(df)

summary(df)

## awesome gender IQ degree height

## Min. :51.3 F:50 Min. : 58.4 BA :25 Min. :2.19

## 1st Qu.:54.8 M:50 1st Qu.: 91.8 HS :25 1st Qu.:4.25

## Median :57.5 Median : 98.1 MS :25 Median :4.86

## Mean :57.4 Mean : 99.2 PhD:25 Mean :4.89

## 3rd Qu.:59.4 3rd Qu.:109.3 3rd Qu.:5.60

## Max. :63.2 Max. :131.1 Max. :7.99

## RT1 RT2

## Min. : 0.467 Min. : 0.371

## 1st Qu.: 2.006 1st Qu.: 2.008

## Median : 4.140 Median : 3.163

## Mean : 4.513 Mean : 4.121

## 3rd Qu.: 6.457 3rd Qu.: 5.301

## Max. :13.278 Max. :20.295

library(psych)

describe(df)

## vars n mean sd median trimmed mad min max range skew

## awesome 1 100 57.39 2.87 57.46 57.31 3.44 51.34 63.16 11.82 0.11

## gender* 2 100 1.50 0.50 1.50 1.50 0.74 1.00 2.00 1.00 0.00

## IQ 3 100 99.21 14.87 98.09 99.78 12.01 58.41 131.05 72.64 -0.34

## degree* 4 100 2.50 1.12 2.50 2.50 1.48 1.00 4.00 3.00 0.00

## height 5 100 4.89 1.16 4.86 4.88 1.08 2.19 7.99 5.80 0.03

## RT1 6 100 4.51 2.94 4.14 4.20 3.23 0.47 13.28 12.81 0.81

## RT2 7 100 4.12 3.13 3.16 3.71 2.18 0.37 20.29 19.92 1.97

## kurtosis se

## awesome -0.94 0.29

## gender* -2.02 0.05

## IQ 0.28 1.49

## degree* -1.39 0.11

## height -0.24 0.12

## RT1 0.03 0.29

## RT2 6.27 0.31

describe(df[,c(1,3)])

## vars n mean sd median trimmed mad min max range skew

## awesome 1 100 57.39 2.87 57.46 57.31 3.44 51.34 63.16 11.82 0.11

## IQ 2 100 99.21 14.87 98.09 99.78 12.01 58.41 131.05 72.64 -0.34

## kurtosis se

## awesome -0.94 0.29

## IQ 0.28 1.49

plot(df$awesome ~ df$IQ)

plot(df$awesome ~ df$gender)

plot(df$awesome ~ df$degree)

plot(df$height ~ df$gender)

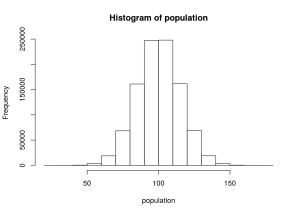

hist(df$IQ)

hist(df$RT1)

cor(df$awesome, df$IQ)

## [1] 0.4604

round(cor(df[,c(1,3,5:7)]),3)

## awesome IQ height RT1 RT2

## awesome 1.000 0.460 -0.419 -0.042 0.091

## IQ 0.460 1.000 -0.026 -0.003 0.142

## height -0.419 -0.026 1.000 0.014 0.142

## RT1 -0.042 -0.003 0.014 1.000 0.012

## RT2 0.091 0.142 0.142 0.012 1.000

var(df$awesome, df$IQ)

## [1] 19.62

table(df[,c(2,4)])

## degree

## gender BA HS MS PhD

## F 12 12 13 13

## M 13 13 12 12

# the big guns

library(ggplot2)

##

## Attaching package: 'ggplot2'

##

## The following object is masked from 'package:psych':

##

## %+%

library(GGally)

ggpairs(df)

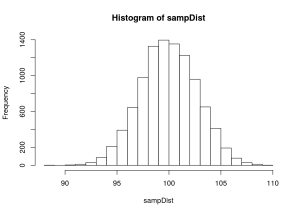

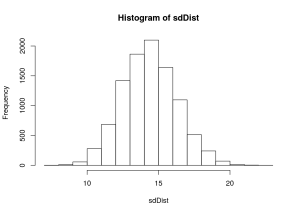

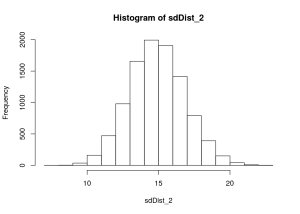

Distributions

qqnorm(df$IQ)

qqline(df$IQ, col="red")

qqnorm(df$RT1)

qqline(df$RT1, col="red")

df$RT1_sqrt <- sqrt(df$RT1)

qqnorm(df$RT1_sqrt)

qqline(df$RT1_sqrt, col="red")

Hypothesis Testing & Estimation: T-tests

# one-sample

t.test(IQ, mu=100, data=df, conf.level=.95)

##

## One Sample t-test

##

## data: IQ

## t = -0.5292, df = 99, p-value = 0.5979

## alternative hypothesis: true mean is not equal to 100

## 95 percent confidence interval:

## 96.26 102.16

## sample estimates:

## mean of x

## 99.21

# IST

t.test(IQ ~ gender, var.equal=TRUE, data=df)

##

## Two Sample t-test

##

## data: IQ by gender

## t = -0.9859, df = 98, p-value = 0.3266

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -8.832 2.969

## sample estimates:

## mean in group F mean in group M

## 97.75 100.68

t.test(x=df[gender=="F",3], y=df[gender=="M",3], var.equal=TRUE)

##

## Two Sample t-test

##

## data: df[gender == "F", 3] and df[gender == "M", 3]

## t = -0.9859, df = 98, p-value = 0.3266

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -8.832 2.969

## sample estimates:

## mean of x mean of y

## 97.75 100.68

# DST

t.test(x=RT1, y=RT2, paired=TRUE, data=df)

##

## Paired t-test

##

## data: RT1 and RT2

## t = 0.9187, df = 99, p-value = 0.3605

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -0.4547 1.2388

## sample estimates:

## mean of the differences

## 0.392

One-Way ANOVAs

aov(awesome ~ degree, data=df)

## Call:

## aov(formula = awesome ~ degree, data = df)

##

## Terms:

## degree Residuals

## Sum of Squares 5.6 807.9

## Deg. of Freedom 3 96

##

## Residual standard error: 2.901

## Estimated effects may be unbalanced

summary(aov(awesome ~ degree, data=df))

## Df Sum Sq Mean Sq F value Pr(>F)

## degree 3 6 1.86 0.22 0.88

## Residuals 96 808 8.42

Two-Way ANOVAs

aov(awesome ~ degree*gender, data=df)

## Call:

## aov(formula = awesome ~ degree * gender, data = df)

##

## Terms:

## degree gender degree:gender Residuals

## Sum of Squares 5.6 472.2 5.8 329.9

## Deg. of Freedom 3 1 3 92

##

## Residual standard error: 1.894

## Estimated effects may be unbalanced

summary(aov(awesome ~ degree*gender, data=df))

## Df Sum Sq Mean Sq F value Pr(>F)

## degree 3 6 2 0.52 0.67

## gender 1 472 472 131.71 <2e-16 ***

## degree:gender 3 6 2 0.54 0.66

## Residuals 92 330 4

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

# regression coefficients output

summary(lm(awesome ~ degree*gender, data=df))

##

## Call:

## lm(formula = awesome ~ degree * gender, data = df)

##

## Residuals:

## Min 1Q Median 3Q Max

## -5.517 -1.256 -0.268 1.328 3.795

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 59.5427 0.5466 108.93 < 2e-16 ***

## degreeHS -0.4621 0.7730 -0.60 0.55

## degreeMS 0.4083 0.7580 0.54 0.59

## degreePhD 0.1155 0.7580 0.15 0.88

## genderM -4.5616 0.7580 -6.02 3.6e-08 ***

## degreeHS:genderM 1.0268 1.0720 0.96 0.34

## degreeMS:genderM 0.0397 1.0720 0.04 0.97

## degreePhD:genderM -0.2190 1.0720 -0.20 0.84

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.89 on 92 degrees of freedom

## Multiple R-squared: 0.594, Adjusted R-squared: 0.564

## F-statistic: 19.3 on 7 and 92 DF, p-value: 1.25e-15

Correlation and Regression

cor(df$awesome, df$IQ)

## [1] 0.4604

summary(lm(awesome ~ IQ, data=df))

##

## Call:

## lm(formula = awesome ~ IQ, data = df)

##

## Residuals:

## Min 1Q Median 3Q Max

## -4.493 -2.538 0.131 2.285 5.202

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 48.5816 1.7345 28.01 < 2e-16 ***

## IQ 0.0888 0.0173 5.13 1.4e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.56 on 98 degrees of freedom

## Multiple R-squared: 0.212, Adjusted R-squared: 0.204

## F-statistic: 26.4 on 1 and 98 DF, p-value: 1.44e-06

Analysis of Categorical Data

table(df[,c(2,4)])

## degree

## gender BA HS MS PhD

## F 12 12 13 13

## M 13 13 12 12

# goodness of fit

chisq.test(table(df$degree)) # are degrees equally distributed?

##

## Chi-squared test for given probabilities

##

## data: table(df$degree)

## X-squared = 0, df = 3, p-value = 1

chisq.test(table(df$degree), p=c(.4, .3, .2, .1)) # is it 40% HS, 30% BS, 20% MS, and 10% PhD?

##

## Chi-squared test for given probabilities

##

## data: table(df$degree)

## X-squared = 30.21, df = 3, p-value = 1.248e-06

# test of independence

chisq.test(table(df[,c(2,4)]))

##

## Pearson's Chi-squared test

##

## data: table(df[, c(2, 4)])

## X-squared = 0.16, df = 3, p-value = 0.9838

# Get measures of association

library(vcd)

## Loading required package: grid

assocstats(table(df[,c(2,4)]))

## X^2 df P(> X^2)

## Likelihood Ratio 0.16004 3 0.98377

## Pearson 0.16000 3 0.98377

##

## Phi-Coefficient : 0.04

## Contingency Coeff.: 0.04

## Cramer's V : 0.04