Easy, Massively Parallel R on UO’s ACISS Cluster

Seriously, guys, seriously. This is amazing.

Imagine a place where you can conjure many computers to run all of the R scripts at once, rather than one after the other. That place is aciss.uoregon.edu (sign up for an account here).

Here are quick links to the stuff I’ll describe below:

- ACISS

- The R package BatchJobs (via github)

- Configuring BatchJobs tutorial

Understanding Processor and Batch Parallelization

Before I get into the details, let me describe how you should be thinking about the kind of parallelizing you’ll do with ACISS. Normally when you run an R script, it does all of its processing on one processor: + (imagine that’s a processor).

If you use the doParallel package, or boot, you may already be aware that you can sometimes run a bunch of parts of an R script, aka a bunch of processes, simultaneously on the multiple cores of your 4- or 8-core Intel CPU*. For example, in a bootstrap situation, you might want to take a bunch of random samples of your data (with replacement) and calculate the statistic of interest on those samples to get a feel for how stable the statistic is. Usually people do this 1000-5000 times. If you were to run each sample one after the other on a single processor, it might take a while (depending on your statistic of interest). But you can tell boot parallel="multicore", ncpus=8 (boot ignores ncpus unless parallel is also set) and boot will split up the processes so that each core in your CPU runs some of the statistic calculations: ++++++++. That’ll cut down the time it takes to do the bootstrap by a factor of about 8.
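Here is a minimal sketch of that kind of within-machine parallelism using boot. The data, the statistic, and the choice of 2 cores are all made up for illustration; swap in your own.

```r
library(boot)  # a recommended package that ships with standard R installs

set.seed(1)
x <- rnorm(200, mean = 10, sd = 2)  # made-up sample, stands in for your data

# boot() calls the statistic with the data and a vector of resampled indices
boot_mean <- function(data, idx) mean(data[idx])

# parallel = "multicore" forks worker processes (not available on Windows;
# use parallel = "snow" there). ncpus is the number of workers to use.
res <- boot(x, statistic = boot_mean, R = 1000,
            parallel = "multicore", ncpus = 2)

sd(res$t[, 1])  # bootstrap standard error of the mean
```

Set ncpus to however many cores your machine actually has; asking for more than that will not make things faster.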

While cutting down processing time by a factor of 8 is nothing to sneeze at, imagine you had 20 computers, each with a CPU with 12 cores. That’s ACISS, but on an even more massive scale (they have more than 20 computers, called nodes, and they have a bunch of different types of nodes).

So now we’re working with 20 groups of 12 processors: 1[++++++++++++] 2[++++++++++++] … 20[++++++++++++]. Let’s pretend you have a statistic that takes 5 minutes to estimate, and you want to bootstrap it 1000 times. One thing you could do is write a script that runs the bootstrap 50 times, using 12 processors, and then run that script on each of the 20 computers (imagine literally walking around to each of 20 computers and running your R script). A job that would take ~83 hours (5*1000/60) executed serially would take about 20 minutes (5*1000/12/20) using the above method. Holeee smokes.
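The back-of-the-envelope numbers above are easy to reproduce and tweak in R. This is only a sketch of the arithmetic: it assumes perfect scaling, with no time spent waiting in the queue, loading data, or combining results. The variable names are mine.

```r
minutes_per_estimate <- 5
replicates           <- 1000
cores_per_node       <- 12
nodes                <- 20

# One processor doing everything, one replicate after another
serial_hours <- minutes_per_estimate * replicates / 60

# Work split evenly over every core on every node
parallel_minutes <- minutes_per_estimate * replicates / (cores_per_node * nodes)

# How many bootstrap replicates each node's script has to run
replicates_per_node <- replicates / nodes

serial_hours         # about 83.3
parallel_minutes     # about 20.8
replicates_per_node  # 50
```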

* One CPU can contain many processors, but usually you only use one at a time.

Designing an R script for BatchJobs

This is an incredibly broad topic, with a lot of heterogeneity in how people implement these things. Here’s just a tiny bit of advice after very little experience. For BatchJobs to work it needs one function that takes a single parameter. You’re also going to give BatchJobs a list of starting values. For each starting value it will request a node, and run your function on the starting value.

    starting_values <- 1:20

    myFunc <- function(start) {
      # Load data
      # Do something to the data, in parallel
      # Return a result
    }

In the example above, the function never uses the value passed to it, but because there are 20 of them, BatchJobs will run your function 20 times. You can use doParallel’s foreach %dopar% {} syntax, or boot’s ncpus option to run parallel processes within your function.

The take home: You want to figure out a way to split up your task into some number of jobs, each of which can be independently run, and each of which can be further optimized by parallelizing them across processors. The return result of the function you write should be something that you’ll have an easy time joining with itself, because you’ll get back as many results as there are starting values.
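To make the "easy to join" point concrete, here is one possible shape for such a function, sketched in base R. Using the starting value as a random seed is my own assumption (it makes each job reproducible and different from the others), the data are simulated, and lapply is just a local stand-in for what BatchJobs would do across nodes.

```r
starting_values <- 1:20

myFunc <- function(start) {
  # Stand-in for "load data": every job sees the same (simulated) data
  set.seed(42)
  x <- rnorm(200, mean = 10, sd = 2)

  # Assumption: use the starting value as a job-specific seed
  set.seed(start)

  # 50 bootstrap replicates of the mean per job (20 jobs x 50 = 1000 total)
  reps <- replicate(50, mean(sample(x, replace = TRUE)))

  # A data frame with a job label stacks cleanly with the other jobs' results
  data.frame(job = start, replicate = seq_along(reps), estimate = reps)
}

# Local stand-in for running the jobs on the cluster
results  <- lapply(starting_values, myFunc)
combined <- do.call(rbind, results)

dim(combined)          # 1000 rows, 3 columns
sd(combined$estimate)  # bootstrap standard error across all jobs
```

Because every job returns a data frame with the same columns, combining 20 results is a single rbind call, and the job column lets you trace any odd value back to the job that produced it.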

Now head over and check out this tutorial; that’s where you’ll get the actual nuts and bolts worked out.

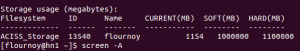

What ACISS looks like…

When you log in, I highly recommend you run either tmux or screen. This will create a virtual session that won’t end if you lose your connection. If you use screen, the command is screen -AM. If you get disconnected, simply log in to ACISS again, and run screen -d -r and you’ll be greeted with the session you disconnected from.

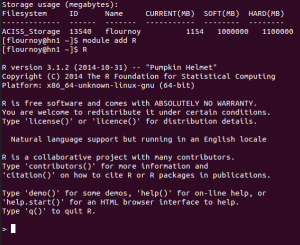

After creating a new screen or tmux session, you need to run module add R to load the default version of R (currently 3.1.2). There are other versions if you need them (run module avail to see what is installed).

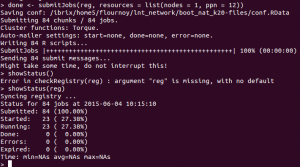

Then you just need to run your BatchJobs script. Once your jobs have been queued, use showStatus(reg) to see how it’s all coming along.
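For orientation, a bare-bones BatchJobs script might look roughly like the sketch below. The job function, the registry id, and the registry directory are all made up, and this does not cover the cluster configuration itself, which is what the tutorial is for. Without any cluster configuration, BatchJobs runs the jobs in your current R session, which is handy for a dry run. The requireNamespace guard is only there so the script does nothing on a machine without BatchJobs installed.

```r
# Hypothetical job function: one argument in, one easily combined result out
myFunc <- function(start) {
  data.frame(job = start, value = start^2)
}

if (requireNamespace("BatchJobs", quietly = TRUE)) {
  library(BatchJobs)

  # The registry is a directory where BatchJobs tracks jobs and stores results.
  # tempfile() is for illustration only; on a cluster, use a directory that
  # all nodes can see.
  reg <- makeRegistry(id = "demo_registry",
                      file.dir = tempfile("demo_registry_"))

  batchMap(reg, myFunc, 1:20)  # define one job per starting value
  submitJobs(reg)              # hand the jobs to the scheduler
  showStatus(reg)              # counts of submitted, running, done, and failed jobs
  waitForJobs(reg)             # block until all jobs have finished

  results  <- reduceResultsList(reg)   # one list element per job
  combined <- do.call(rbind, results)  # stack them into one data frame
}
```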

Good luck!

John, Thanks. This is cool. I would love to see a demonstration of this.

No problem. This might be worth a tutorial when we resume in the Fall. It’s such a great resource, and fairly easy to use if you already have some R background. If you have a specific problem you think you could tackle with this, we could demo that, or I could run through what I used it for.

Could it be used to analyze the Yelp Challenge dataset?

Yeah, I think so. The major challenge is figuring out a way of breaking up the analysis into parallel chunks — routines that are all doing the same thing, and that can be combined later. What we couldn’t do is a single multi-level model on the full data set (probably). The computations for such a model would all be limited to a single process, so ACISS wouldn’t buy us anything. But I think there are ways to subsample the data, and perhaps recombine. Do you know what specific questions you’d want to ask?

this looks promising: http://web.cs.ucla.edu/~ameet/blb_icml2012_final.pdf

I also would love to see this demonstrated! Any bootstrapping example would probably be pretty intuitive, and widely applicable. Thanks so much for writing this up, John! It’s a great resource. 🙂

Yeah, maybe later this term. Any statistical issues you want to investigate using simulation methods? I’ve been wondering if selecting a mediating variable by significance threshold inflates the estimate of the mediated effect, but I’m not sure how straightforward that would be to code up.

neat idea! i think that would be pretty straightforward to code, depending on how you want to do the mediation analysis: if you just want to use speedy built-in stuff (e.g. lm() for the models and then Sobel tests for sig), then it would be easy-peasy, but if you want to judge the significance of the mediated effect(s) using bootstrapped CIs, then you might need to get clever about the code so it doesn’t take forever.