Overview

Last month I participated in one of the strongest webinars the EDUCAUSE Learning Initiative has put out thus far: a two-hour session on the various means through which the ed tech community is assessing the quality of (new) digital education initiatives. Conceived as part show-and-tell, part commentary on the state of the field, the session featured three presenters: Kay Shattuck, Director of Research at Quality Matters; Veronica Diaz, Associate Director of the EDUCAUSE Learning Initiative; and Tanya Joosten, Director of eLearning Research and Development at UW-Milwaukee. There was also an extremely active webinar chat feed, with challenging questions and exciting examples shared by participants from universities across North America.

In the weeks since I logged on for this webinar, I have been thinking about the large gap between observing the shape of a service, or the strengths and limitations of a technological tool, and assessing that service or that tool’s impact–either on faculty or on students. (Hello, Canvas migration.) “Impact” is a word that my composition students often used erroneously, or as a means of saying, “X is important but I don’t know why.” And even after this superb webinar experience, I’m still left wondering about the fuzziness of that word. Is there a better one out there for what we are trying to measure? Something that’s not just a political buzzword with an emotional punch, or code for “let’s make personnel decisions based on standardized test scores”?

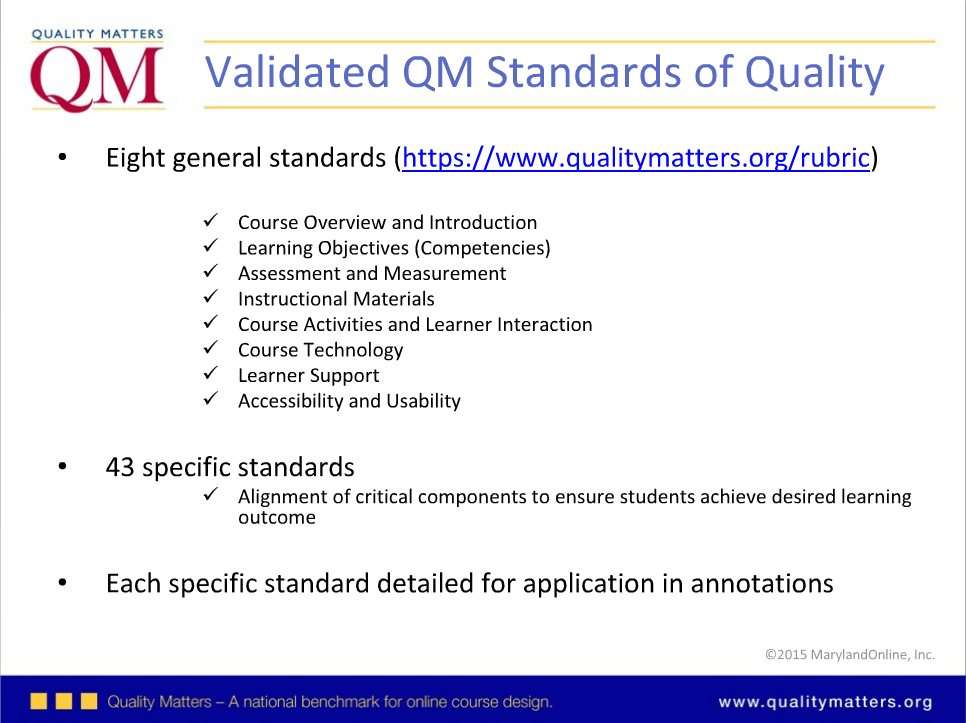

Research on Quality Matters

The first presentation was an overview of the research wing of the Quality Matters Program, and highlighted their ongoing efforts to use data analysis to refine and confirm the success of the QM model. If the QM rubric “sets a threshold for student learning,” as Kay Shattuck noted in her opening slides, then it also serves as a rubric through which an institution can conduct impact analysis. This was appealing to me, in part because UO’s rugged (sometimes even willful) independence has left us with a dearth of maps to follow as we try to move forward with our online programming. Investing in field-led efforts, such as QM, would help provide context for campus decision-making. But as the presentation continued, I was reminded of the limits of using any one rubric as the sole means through which “impact” is assessed.

QM’s own research from recent years makes note of this: “Expecting QM certification to solve all student success alone is unrealistic” (Miner 2014). Nevertheless, the value of QM in providing a framework through which student success can be assessed is clear. As a decade of QM research has found, adopting a rubric generates an institution-wide common vocabulary, helps improve the pedagogy of instructors new to online education, increases student satisfaction and engagement, and helps identify the means through which online courses might be made more accessible to all.

Additional Takeaways

- Know what evidence your stakeholders want (improved grades, retention, satisfaction, etc.).

- There’s a danger in equating improved grades with improved student outcomes. Grades are the easiest marker, but not always the most accurate.

- Ample time is essential for any successful assessment. Don’t try for quick results–be thorough.

ELI Seeking Evidence of Impact

The variable or fuzzy nature of “impact” was a concept that the second presentation embraced, to some extent. Veronica Diaz hopped on to show off ELI’s case study series on “Seeking Evidence of Impact,” and the papers in that growing resource library take on everything from course recommendation applications and MOOCs to classroom seating and the use of various devices in learning. “Impact” is a broad and flexible category, in this sense–and that’s probably to the benefit of newcomers, as they determine not just what to assess, but by what means. To that end, ELI has put together a guide for Seeking Evidence of Impact, a roadmap that can be implemented at any institution.

Additional Takeaways

- Start the process of collecting evidence of impact early on in any new initiative. Integrate that planning into your programming from the outset.

- Be able to answer the following: what are 2-3 key research questions for your teaching and learning work?

- Pilot any assessment plan with key stakeholders–write up a draft and shop it around.

- The order in which you deploy your tools is just as important as what tools you choose to use–be sure to develop a process that makes sense for your institution.

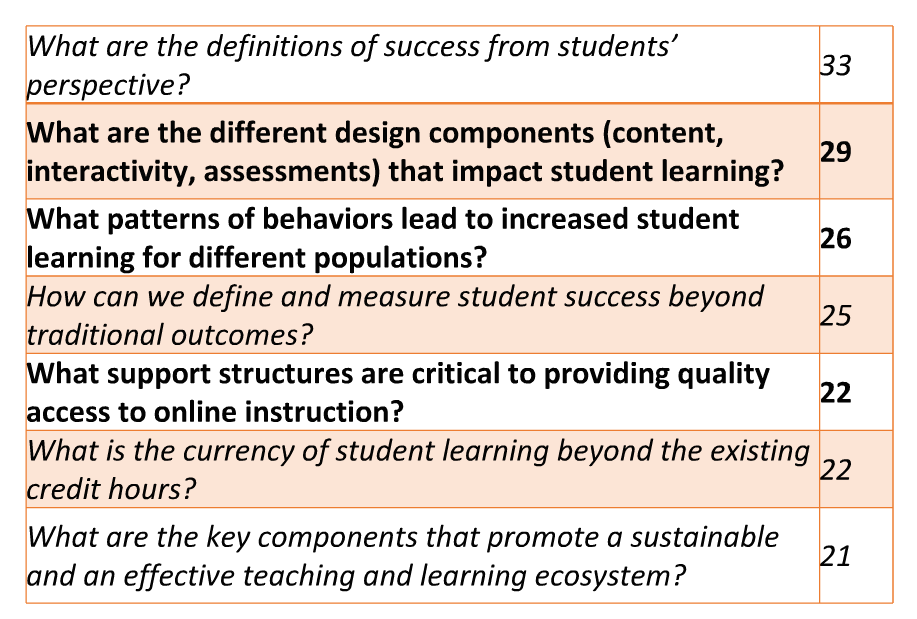

National Research Center for Distance Education and Technological Advancements

The final presentation of the day was on the new National Research Center for Distance Education and Technological Advancements (DETA), a project that is being implemented at UW-Milwaukee (with government grant funding). Tanya Joosten began her presentation with the question “How do we ensure all students have access to a quality higher education?” As a former CUNY employee who engaged with this question on a daily basis, I was excited to see what has emerged from this expansive point of view. As one of its first projects, DETA is investigating how faculty development affects online course quality (a topic about which there is much anecdata but a relative lack of statistical analysis), as well as how to identify those instructional and institutional processes that will have a (positive) impact on learning. The goal is to better identify and promote good practices, sharing them through DETA’s data research center.

One of the things I really liked about this presentation was Joosten’s overview of how F2F learning is a false “gold standard.” For too long, the field has been stuck comparing online courses to face-to-face learning, as if the latter were somehow inherently better. This persisted in the research even though we’ve all taken (or even taught) face-to-face courses of variable quality! So now it’s important to develop a standardized vocabulary that will improve cross-institutional analysis, but will also recognize that these various delivery models are functionally equivalent. Right now we keep asking questions about the quality of online education at our campuses, even though study after study shows there is no significant difference. We need to trust the depth of the field’s knowledge, rather than reinvent the wheel at every college or university across the country.

Q&A

At the end of the three presentations, there was an excellent Q&A.

How do we actually get people to change their [teaching] practice based on research results?

Joosten: Ensure that you have the right people engaged–folks who can implement results. At her institution they brought in administrators from student affairs and student services. Instructional support matters a lot, too.

How will the work around evidence of impact evolve in the next 2-3 years?

Shattuck: People are figuring out that they need to know what’s been done before. Research questions are being refined, and people are reading the preexisting literature.

Diaz: The field is becoming more granular. People are interested in “just in time” analyses, and the goal of adapting on the fly.

Joosten: We are going to have an improved and more coherent understanding of the literature of the past few decades. There will be renewed efforts to understand elements inside courses and institutions, and across institutions, and this will impact student success.
